Azure Functions: Developer-Friendly Serverless

As I was wrestling with Lambda's CloudFormation templates for the third time this week, I felt a deep longing for Azure Functions. AWS Lambda is just not what serverless development is supposed to feel like. Don't get me wrong... I've deployed plenty of successful Lambda functions in production. But when I use Azure Functions for serverless development, the difference in developer experience is like switching from vim to VS Code. Sure, both get the job done, but one of them doesn't require memorizing arcane commands just to save a file.

As someone who's built enterprise solutions on both AWS and Azure, I want to share why Azure Functions is my go-to for serverless development, especially when developer productivity matters. By the end of this tutorial, you'll have built, debugged, and deployed your first Azure Function—and more importantly, you'll understand why the experience feels so different from other serverless platforms.

What Makes Azure Functions Different

Local Development That Actually Works

Remember the first time you tried to debug a Lambda function locally? If you're like me, you probably spent half a day setting up SAM CLI, configuring Docker, and still ended up testing in the cloud with console.log statements like it's 1999. Azure Functions Core Tools, on the other hand, just works.

Here's one thing I love about Azure Functions: I can hit F5 in VS Code, set a breakpoint in my TypeScript function, and debug it exactly like any other NodeJS application. No Docker containers spinning up, no CloudFormation templates to parse... just your code running locally with full debugging support.

Another helpful tool is Azurite. This local Azure storage emulator runs in the background and provides blob, queue, and table storage that behaves exactly like the real thing. Your function can read from queues, write to blobs, and interact with table storage, all locally. When you deploy, you just swap the connection string. It's suspiciously simple.

Language Support That Makes Sense

Azure Functions treats TypeScript as a first-class citizen. The project templates ship with a tsconfig.json and a build script, and deploying from VS Code runs that build for you, so you don't have to assemble a compile pipeline by hand. Compare this to Lambda, where you're either shipping JavaScript or setting up a build process to compile TypeScript, then hoping your node_modules folder doesn't exceed the deployment package size limit.

Speaking of dependencies... remember Lambda Layers? That special circle of hell where you package your dependencies separately, deploy them as a layer, and pray the versions match what you developed against? Azure Functions keeps it simple and just uses package.json. Run npm install, deploy, done. Your dependencies are bundled with your function automatically. Revolutionary, right? 🙄

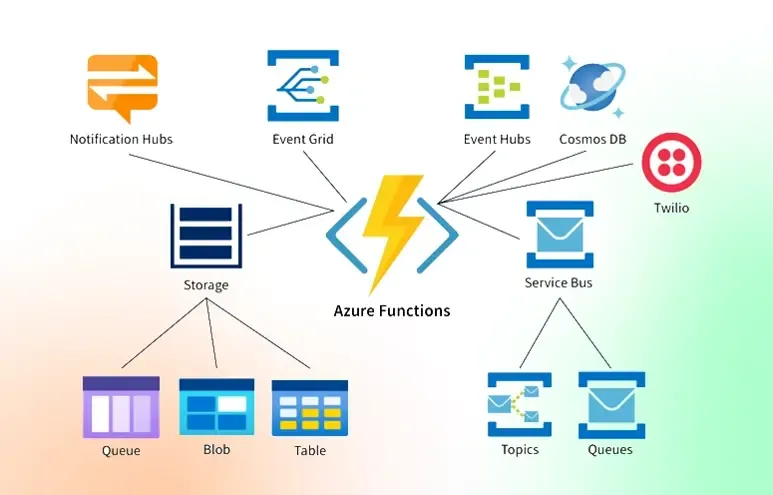

Triggers & Bindings Simplify Your Code

This is one of those areas where Azure Functions really shines. Instead of writing boilerplate code to read from a queue or write to a database, you declare your intentions in a configuration file, and the platform handles the plumbing.

Here's a taste of what I mean. In Lambda, reading from DynamoDB and writing to SQS looks something like this:

// Lambda: The manual approach

import AWS from 'aws-sdk';

const dynamodb = new AWS.DynamoDB.DocumentClient();

const sqs = new AWS.SQS();

export const handler = async (event) => {

try {

// Manually parse the incoming event

const data = JSON.parse(event.body);

// Manually read from DynamoDB

const result = await dynamodb.get({

TableName: process.env.TABLE_NAME,

Key: { id: data.id }

}).promise();

// Process the data

const processed = processData(result.Item);

// Manually send to SQS

await sqs.sendMessage({

QueueUrl: process.env.QUEUE_URL,

MessageBody: JSON.stringify(processed)

}).promise();

return {

statusCode: 200,

body: JSON.stringify({ success: true })

};

} catch (error) {

console.error(error);

return {

statusCode: 500,

body: JSON.stringify({ error: 'Internal server error' })

};

}

};

In Azure Functions with bindings? It's declarative:

// Azure Functions: The declarative approach

import { AzureFunction, Context, HttpRequest } from '@azure/functions'

const httpTrigger: AzureFunction = async function (

context: Context,

req: HttpRequest,

inputDocument: any

): Promise<void> {

// The document is already loaded from Cosmos DB

const processed = processData(inputDocument);

// Just assign to the output binding

context.bindings.outputQueue = processed;

context.res = {

body: { success: true }

};

};

export default httpTrigger;

The magic happens in function.json. You declare your inputs and outputs, and Azure Functions handles the rest. No SDK calls, no error handling for service connections, just your business logic.
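For the declarative handler above, the function.json could look roughly like this. I've written it as a TypeScript object so the binding names can be checked against the handler; the real file is plain JSON with the same shape. The database, container, and queue names, the auth level, and the `{Query.id}` lookup are placeholders of mine, and the Cosmos DB property names (`containerName`, `connection`) assume the 4.x Cosmos DB extension. Older extension versions use different property names.

```typescript
// Sketch of a function.json for the declarative example, as a typed object.
// Assumptions (not from the article): all resource names, the auth level,
// the "{Query.id}" lookup, and the 4.x Cosmos DB extension property names.
interface Binding {
  type: string;
  direction: "in" | "out";
  name: string;
  [setting: string]: unknown;
}

const functionJson: { bindings: Binding[] } = {
  bindings: [
    { type: "httpTrigger", direction: "in", name: "req", authLevel: "function", methods: ["post"] },
    { type: "http", direction: "out", name: "res" },
    {
      type: "cosmosDB",
      direction: "in",
      name: "inputDocument",        // matches the handler's third parameter
      databaseName: "appointments", // placeholder
      containerName: "items",       // placeholder
      connection: "CosmosDBConnection",
      id: "{Query.id}",             // document id taken from ?id=... on the request
      partitionKey: "{Query.id}",
    },
    {
      type: "queue",
      direction: "out",
      name: "outputQueue",          // matches context.bindings.outputQueue
      queueName: "processed-items", // placeholder
      connection: "AzureWebJobsStorage",
    },
  ],
};

// List binding names by direction, handy for spotting a name that does not
// line up with the handler's parameters or its context.bindings keys.
function bindingNames(direction: "in" | "out"): string[] {
  return functionJson.bindings.filter(b => b.direction === direction).map(b => b.name);
}

console.log(bindingNames("in"));  // [ 'req', 'inputDocument' ]
console.log(bindingNames("out")); // [ 'res', 'outputQueue' ]
```

The thing to notice is that every `name` has to match something in the code: a handler parameter for inputs, a `context.bindings` key (or `context.res`) for outputs.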

Set Up Your Development Environment

Let's get you set up. I promise this will take less time than your last npm install on a large project.

Prerequisites

You'll need:

- VS Code with the Azure Functions extension (search for "Azure Functions" in the extensions marketplace)

- Azure Functions Core Tools v4 or later

- NodeJS 18 or later (we're using TypeScript, but NodeJS runs it all)

- An Azure account (the free tier is more than enough for learning)

Installation of Tools

Install via npm (recommended):

npm install -g azure-functions-core-tools@4

Or, alternatively, on macOS or Linux:

# Install Azure Functions Core Tools

brew tap azure/functions

brew install azure-functions-core-tools@4

# Verify installation

func --version

Or on Windows with Chocolatey:

choco install azure-functions-core-tools

Pro tip: If you're working with a team, create a Dev Container configuration. Your .devcontainer/devcontainer.json ensures everyone has the exact same environment:

{

"name": "Azure Functions TypeScript",

"image": "mcr.microsoft.com/azure-functions/node:4-node18",

"features": {

"ghcr.io/devcontainers/features/azure-cli:1": {}

},

"customizations": {

"vscode": {

"extensions": [

"ms-azuretools.vscode-azurefunctions",

"dbaeumer.vscode-eslint"

]

}

}

}

Building Your First Function

Enough setup, let's build something. We're creating a function that processes appointment data, validates it, and queues it for further processing. This is simplified from a real system I built for handling VA appointment scheduling, minus the PHI, of course.

Creating the Function Project

Open VS Code and hit Cmd+Shift+P (or Ctrl+Shift+P on Windows/Linux) to open the command palette.

- Type "Azure Functions: Create New Project"

- Choose your workspace folder

- Select "TypeScript"

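- Select "Model V3" (this tutorial uses the function.json layout; Model V4 registers functions in code and generates a different project structure)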

- Choose "HTTP trigger" for your first function

- Name it "ProcessAppointment"

- Select "Anonymous" for auth (we'll add proper auth later)

VS Code just created your entire project structure. Let's see what we got:

your-project/

├── .vscode/ # VS Code configurations

├── ProcessAppointment/

│ ├── function.json # Function configuration

│ └── index.ts # Your function code

├── host.json # Global configuration

├── local.settings.json # Local environment variables

├── package.json # Dependencies

└── tsconfig.json # TypeScript configuration

Writing the Actual Code

Let's replace the boilerplate with something useful. Open ProcessAppointment/index.ts:

import { AzureFunction, Context, HttpRequest } from '@azure/functions'

interface AppointmentRequest {

patientId: string;

providerId: string;

appointmentDate: string;

appointmentType: 'initial' | 'followup' | 'urgent';

notes?: string;

}

interface ProcessedAppointment extends AppointmentRequest {

id: string;

processedAt: string;

validationStatus: 'valid' | 'invalid';

validationErrors?: string[];

}

const httpTrigger: AzureFunction = async function (

context: Context,

req: HttpRequest

): Promise<void> {

context.log('Processing appointment request');

try {

// Parse and validate the request

const appointment: AppointmentRequest = req.body;

const validationErrors = validateAppointment(appointment);

const processed: ProcessedAppointment = {

...appointment,

id: generateId(),

processedAt: new Date().toISOString(),

validationStatus: validationErrors.length === 0 ? 'valid' : 'invalid',

validationErrors: validationErrors.length > 0 ? validationErrors : undefined

};

if (processed.validationStatus === 'valid') {

// Queue for further processing

context.bindings.appointmentQueue = processed;

context.res = {

status: 200,

body: {

message: 'Appointment queued successfully',

appointmentId: processed.id

}

};

} else {

context.res = {

status: 400,

body: {

message: 'Validation failed',

errors: validationErrors

}

};

}

} catch (error) {

context.log.error('Error processing appointment:', error);

context.res = {

status: 500,

body: {

message: 'Internal server error',

error: process.env.APP_ENVIRONMENT === 'development' && error instanceof Error ? error.message : undefined

}

};

}

};

function validateAppointment(appointment: AppointmentRequest): string[] {

const errors: string[] = [];

if (!appointment.patientId) {

errors.push('Patient ID is required');

}

if (!appointment.providerId) {

errors.push('Provider ID is required');

}

if (!appointment.appointmentDate) {

errors.push('Appointment date is required');

} else {

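const date = new Date(appointment.appointmentDate);

// An unparseable string yields an invalid Date, which would slip past the comparison below

if (isNaN(date.getTime())) {

errors.push('Appointment date is not a valid date');

} else if (date < new Date()) {

errors.push('Appointment date must be in the future');

}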

}

if (!['initial', 'followup', 'urgent'].includes(appointment.appointmentType)) {

errors.push('Invalid appointment type');

}

return errors;

}

function generateId(): string {

return `APT-${Date.now()}-${Math.random().toString(36).slice(2, 11)}`;

}

export default httpTrigger;

Now update ProcessAppointment/function.json to add an output binding for the queue:

{

"bindings": [

{

"authLevel": "anonymous",

"type": "httpTrigger",

"direction": "in",

"name": "req",

"methods": ["post"]

},

{

"type": "http",

"direction": "out",

"name": "res"

},

{

"type": "queue",

"direction": "out",

"name": "appointmentQueue",

"queueName": "appointment-processing",

"connection": "AzureWebJobsStorage"

}

]

}

The "It Just Works" Moment

Ready for the magic? Hit F5.

VS Code starts the Azure Functions runtime, attaches the debugger, and gives you a local URL. You'll see something like:

Azure Functions Core Tools

Core Tools Version: 4.0.5455

Function Runtime Version: 4.27.5.21554

Functions:

ProcessAppointment: [POST] http://localhost:7071/api/ProcessAppointment

Open another terminal and test it:

curl -X POST http://localhost:7071/api/ProcessAppointment \

-H "Content-Type: application/json" \

-d '{

"patientId": "PAT-12345",

"providerId": "DOC-67890",

"appointmentDate": "2025-09-01T10:00:00Z",

"appointmentType": "initial",

"notes": "First consultation"

}'

Set a breakpoint on the validateAppointment call and send the request again. The debugger stops right there. You can inspect variables, step through code, and watch the execution flow, just like any other NodeJS application. Try doing that with a Lambda function without pulling your hair out.
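Since the validator is a pure function, you can also poke at it without starting the Functions host at all. Here's a minimal sketch of that idea using a compact restatement of validateAppointment. The `now` parameter and the invalid-date check are additions of mine for this sketch, so the checks don't depend on the wall clock.

```typescript
type AppointmentType = "initial" | "followup" | "urgent";

interface AppointmentRequest {
  patientId: string;
  providerId: string;
  appointmentDate: string;
  appointmentType: AppointmentType;
}

// Compact restatement of the tutorial's validator. `now` is injectable so
// the "must be in the future" rule can be checked deterministically.
function validateAppointment(a: Partial<AppointmentRequest>, now: Date = new Date()): string[] {
  const errors: string[] = [];
  if (!a.patientId) errors.push("Patient ID is required");
  if (!a.providerId) errors.push("Provider ID is required");
  if (!a.appointmentDate) {
    errors.push("Appointment date is required");
  } else {
    const date = new Date(a.appointmentDate);
    if (isNaN(date.getTime())) {
      errors.push("Appointment date is not a valid date");
    } else if (date.getTime() < now.getTime()) {
      errors.push("Appointment date must be in the future");
    }
  }
  const allowed: string[] = ["initial", "followup", "urgent"];
  if (!a.appointmentType || allowed.indexOf(a.appointmentType) === -1) {
    errors.push("Invalid appointment type");
  }
  return errors;
}

// Fixed reference time so the results below are repeatable.
const referenceNow = new Date("2025-01-01T00:00:00Z");

const validResult = validateAppointment(
  { patientId: "PAT-12345", providerId: "DOC-67890", appointmentDate: "2025-09-01T10:00:00Z", appointmentType: "initial" },
  referenceNow
);
const invalidResult = validateAppointment(
  { providerId: "DOC-67890", appointmentDate: "2024-01-01T00:00:00Z" },
  referenceNow
);

console.log(validResult.length);   // 0
console.log(invalidResult.length); // 3 (missing patient ID, past date, invalid type)
```

Keeping the validation logic free of `context` and `req` is what makes this possible; the handler stays a thin wrapper around it.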

Local Development Deep Dive

Configure Local Settings

The local.settings.json file is where your local configuration lives. It's automatically excluded from source control (check your .gitignore), which is exactly what you want:

{

"IsEncrypted": false,

"Values": {

"AzureWebJobsStorage": "UseDevelopmentStorage=true",

"FUNCTIONS_WORKER_RUNTIME": "node",

"AzureWebJobsFeatureFlags": "EnableWorkerIndexing",

"CosmosDBConnection": "AccountEndpoint=https://localhost:8081/;AccountKey=C2y6yDjf5/R+ob0N8A7Cgv30VRDJIWEHLM+4QDU5DE2nQ9nDuVTqobD4b8mGGyPMbIZnqyMsEcaGQy67XIw/Jw==",

"ServiceBusConnection": "Endpoint=sb://localhost.servicebus.windows.net/;SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=local",

"APP_ENVIRONMENT": "development",

"LOG_LEVEL": "debug"

},

"Host": {

"LocalHttpPort": 7071,

"CORS": "*"

}

}

The beautiful part? These settings map directly to Application Settings in Azure. No transformation, no mapping files. The same process.env.CosmosDBConnection works locally and in production.
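Because settings arrive as plain environment variables in both places, I like to read them once through a small typed loader. This is just a sketch: the helper names and the "info" default are my own, not part of the Functions SDK. Inside a function app you would pass process.env; a plain object works for tests.

```typescript
type Env = { [name: string]: string | undefined };

interface AppSettings {
  storageConnection: string;
  cosmosConnection: string;
  logLevel: string;
}

// Read one required setting and fail with the setting's name, rather than
// a vague "undefined" error deep inside a binding or SDK call.
function requireSetting(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value === "") {
    throw new Error("Missing application setting: " + name);
  }
  return value;
}

// Setting names match the local.settings.json shown above.
function loadSettings(env: Env): AppSettings {
  return {
    storageConnection: requireSetting(env, "AzureWebJobsStorage"),
    cosmosConnection: requireSetting(env, "CosmosDBConnection"),
    logLevel: env["LOG_LEVEL"] || "info", // optional; "info" is an assumed default
  };
}

const localSettings = loadSettings({
  AzureWebJobsStorage: "UseDevelopmentStorage=true",
  CosmosDBConnection: "AccountEndpoint=https://localhost:8081/;AccountKey=placeholder",
});
console.log(localSettings.logLevel); // info
```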

Debugging Like a Real Application

Let's add a more complex function to see debugging in action. Create a new function that reads from the queue we're writing to:

func new --name ProcessQueuedAppointment --template "Azure Queue Storage trigger"

Update ProcessQueuedAppointment/index.ts:

import { AzureFunction, Context } from '@azure/functions'

interface QueuedAppointment {

id: string;

patientId: string;

providerId: string;

appointmentDate: string;

processedAt: string;

}

const queueTrigger: AzureFunction = async function (

context: Context,

appointment: QueuedAppointment

): Promise<void> {

context.log('Processing queued appointment:', appointment.id);

try {

// Simulate some async processing

await validateProvider(appointment.providerId);

await checkPatientEligibility(appointment.patientId);

await scheduleAppointment(appointment);

// Write to Cosmos DB (via output binding)

context.bindings.appointmentDocument = {

...appointment,

status: 'scheduled',

scheduledAt: new Date().toISOString()

};

context.log('Successfully scheduled appointment:', appointment.id);

} catch (error) {

context.log.error('Failed to process appointment:', error);

// Rethrow so the runtime retries; after repeated failures the message moves to the poison queue

throw error;

}

};

async function validateProvider(providerId: string): Promise<void> {

// Simulate API call

await new Promise(resolve => setTimeout(resolve, 100));

if (!providerId.startsWith('DOC-')) {

throw new Error(`Invalid provider ID: ${providerId}`);

}

}

async function checkPatientEligibility(patientId: string): Promise<void> {

// Simulate database lookup

await new Promise(resolve => setTimeout(resolve, 150));

// Random eligibility check for demo

if (Math.random() > 0.9) {

throw new Error(`Patient ${patientId} is not eligible for appointment`);

}

}

async function scheduleAppointment(appointment: QueuedAppointment): Promise<void> {

// Simulate external scheduling system

await new Promise(resolve => setTimeout(resolve, 200));

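// context is not in scope in this helper; pass it in as a parameter if you want invocation-scoped logs

console.log(`Appointment ${appointment.id} scheduled for ${appointment.appointmentDate}`);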

}

export default queueTrigger;

With both functions running locally, you can trace an appointment from HTTP request through queue processing. Set breakpoints in both functions and watch the flow. This is the kind of debugging experience that makes you actually enjoy working with serverless.
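One thing worth understanding while you step through the failure path: rethrowing from a Storage queue trigger doesn't dead-letter the message right away. The message becomes visible again and is retried, and once its dequeue count reaches maxDequeueCount (5 by default, configurable under extensions.queues in host.json) the runtime moves it to a queue named after the original with a -poison suffix. The helpers below are only a model of that rule to reason with. They're hypothetical names of mine, not a runtime API.

```typescript
// Model of the Storage queue trigger's retry rule. These helpers are
// hypothetical and exist only for illustration; the Functions runtime
// applies this logic for you.
const DEFAULT_MAX_DEQUEUE_COUNT = 5;

// Name of the queue that receives messages which keep failing.
function poisonQueueName(queueName: string): string {
  return queueName + "-poison";
}

// Where a message ends up after a failed attempt, given how many times it
// has been dequeued so far (including the attempt that just failed).
function nextDestination(
  queueName: string,
  dequeueCount: number,
  maxDequeueCount: number = DEFAULT_MAX_DEQUEUE_COUNT
): string {
  return dequeueCount >= maxDequeueCount ? poisonQueueName(queueName) : queueName;
}

console.log(nextDestination("appointment-processing", 1)); // appointment-processing
console.log(nextDestination("appointment-processing", 5)); // appointment-processing-poison
```

Locally, that means a failing appointment shows up in appointment-processing-poison in Storage Explorer after a handful of retries, which is a handy thing to watch for.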

Working with Storage Locally

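Azurite needs to be running before your functions start, because Core Tools doesn't launch it for you. Start it from the Azurite VS Code extension (run "Azurite: Start" from the command palette) or with npx azurite in a terminal. Then let's make sure everything's connected properly.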

Install the Azure Storage Explorer if you haven't already—it's free and works with local storage. Connect to your local storage:

- Right-click "Storage Accounts"

- Select "Connect to Azure Storage"

- Choose "Local storage emulator"

Now you can see your queues, blobs, and tables. When your function writes to the queue, you'll see the messages appear in real-time. It's incredibly satisfying to watch your local functions interact with local storage that behaves exactly like production.

For Cosmos DB, grab the emulator from Microsoft. Once it's running, your functions can connect using the connection string in local.settings.json. The data explorer (https://localhost:8081/_explorer/index.html) lets you query your local data just like in Azure.

Deploying to Azure

Alright, our function works locally. Time to ship it. I'll show you three ways, from "I need this deployed NOW" to "I want a proper CI/CD pipeline."

Method 1: The Right-Click Deploy

In VS Code:

- Open the Azure extension (the Azure icon in the sidebar)

- Sign in to your Azure account

- Right-click on "Function App" and select "Create Function App in Azure"

- Follow the prompts (unique name, Node.js 18, region near you)

- Once created, right-click your local project and select "Deploy to Function App"

That's it. Your TypeScript is compiled, your node_modules are packaged, your function is deployed. The first time I did this after wrestling with serverless.yml and CloudFormation, I literally said "that's it?" out loud to an empty room.

Method 2: Azure CLI

# Login to Azure

az login

# Create a resource group

az group create --name rg-appointment-functions --location eastus2

# Create a storage account (required for any Function App)

az storage account create \

--name stappointmentfuncs \

--location eastus2 \

--resource-group rg-appointment-functions \

--sku Standard_LRS

# Create the Function App

az functionapp create \

--resource-group rg-appointment-functions \

--consumption-plan-location eastus2 \

--runtime node \

--runtime-version 18 \

--functions-version 4 \

--name func-appointments-prod \

--storage-account stappointmentfuncs

# Deploy your code

func azure functionapp publish func-appointments-prod

The Azure CLI is surprisingly pleasant to use. Autocomplete works, the commands make sense, and the error messages actually help you fix problems.

Method 3: GitHub Actions

Create .github/workflows/deploy.yml:

name: Deploy to Azure Functions

on:

push:

branches: [main]

env:

AZURE_FUNCTIONAPP_NAME: func-appointments-prod

AZURE_FUNCTIONAPP_PACKAGE_PATH: '.'

NODE_VERSION: '18.x'

jobs:

build-and-deploy:

runs-on: ubuntu-latest

steps:

- name: Checkout code

uses: actions/checkout@v3

- name: Setup Node.js

uses: actions/setup-node@v3

with:

node-version: ${{ env.NODE_VERSION }}

- name: Install dependencies

run: |

npm ci

npm run build --if-present

npm run test --if-present

- name: Upload to Azure

uses: Azure/functions-action@v1

with:

app-name: ${{ env.AZURE_FUNCTIONAPP_NAME }}

package: ${{ env.AZURE_FUNCTIONAPP_PACKAGE_PATH }}

publish-profile: ${{ secrets.AZURE_FUNCTIONAPP_PUBLISH_PROFILE }}

Get your publish profile from the Azure Portal (Function App → Get publish profile) and add it as a GitHub secret. Push to main, and your function deploys automatically. No custom build scripts, no Docker containers, no suffering.

Configuration Management

Application settings in Azure Functions just work. In the portal, go to your Function App → Configuration → Application settings. Add your production connection strings:

CosmosDBConnection: <your-cosmos-connection-string>

ServiceBusConnection: <your-service-bus-connection-string>

APPINSIGHTS_INSTRUMENTATIONKEY: <your-app-insights-key>

For secrets, use Key Vault references. Instead of putting the actual secret in the setting, you reference Key Vault:

DatabasePassword: @Microsoft.KeyVault(SecretUri=https://myvault.vault.azure.net/secrets/DatabasePassword/)

Enable Managed Identity for your Function App, grant it access to Key Vault, and now your functions can read secrets without any code changes. Your local.settings.json still works for local development, but production secrets never leave Key Vault.
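To make the "no code changes" part concrete, here's a small sketch. The first helper just builds the reference string in the format shown above (vault and secret names are placeholders). The second reads the setting the way your function would. As far as I know, when a reference fails to resolve the function sees the raw reference string instead of the secret, so the sketch checks for that. Both helper names are mine.

```typescript
// Build the app-setting value for a Key Vault reference. Leaving the version
// off makes the reference follow the latest version of the secret.
function keyVaultReference(vaultName: string, secretName: string, version?: string): string {
  const base = "https://" + vaultName + ".vault.azure.net/secrets/" + secretName + "/";
  return "@Microsoft.KeyVault(SecretUri=" + base + (version ? version : "") + ")";
}

// Inside the function, the resolved secret is read like any other setting.
// Pass process.env in a real function app.
function readDatabasePassword(env: { [name: string]: string | undefined }): string {
  const value = env["DatabasePassword"];
  if (!value) {
    throw new Error("DatabasePassword is not configured");
  }
  // Assumption: an unresolved reference shows up as the raw reference string.
  if (value.indexOf("@Microsoft.KeyVault(") === 0) {
    throw new Error("Key Vault reference was not resolved; check the managed identity's access");
  }
  return value;
}

console.log(keyVaultReference("myvault", "DatabasePassword"));
// @Microsoft.KeyVault(SecretUri=https://myvault.vault.azure.net/secrets/DatabasePassword/)
```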

Monitoring and Troubleshooting

Application Insights Integration

If you created your Function App through the portal or VS Code, Application Insights is already connected. If not, it's one setting to add. The integration is ridiculously comprehensive:

- Every function execution is tracked automatically

- Dependencies (HTTP calls, database queries) are captured

- Custom metrics and traces with context.log statements

- End-to-end transaction tracking across multiple functions

The Live Metrics view is nice. Open Application Insights, click "Live Metrics," and watch your functions execute in real-time. You see incoming requests, response times, and any exceptions as they happen. It's a helpful tool for real-time observability into your deployments.

Here's how to add custom telemetry:

import { AzureFunction, Context, HttpRequest } from '@azure/functions'

import { TelemetryClient } from 'applicationinsights'

const client = new TelemetryClient(process.env.APPINSIGHTS_INSTRUMENTATIONKEY);

const httpTrigger: AzureFunction = async function (

context: Context,

req: HttpRequest

): Promise<void> {

const startTime = Date.now();

try {

// Track custom event

client.trackEvent({

name: 'AppointmentProcessed',

properties: {

appointmentType: req.body.appointmentType,

patientId: req.body.patientId

}

});

// Your function logic here

// Track custom metric

client.trackMetric({

name: 'AppointmentProcessingTime',

value: Date.now() - startTime

});

} catch (error) {

// Exceptions are tracked automatically, but you can add context

client.trackException({

exception: error,

properties: {

functionName: context.executionContext.functionName,

invocationId: context.executionContext.invocationId

}

});

throw error;

}

};

Debugging Production Issues

When something goes wrong in production (and it will), you have options:

Kudu Console: Your emergency access hatch. Navigate to https://[your-function-app].scm.azurewebsites.net. You can browse files, check environment variables, and even run commands. I once fixed a production issue by editing a config file directly through Kudu. Not recommended, but sometimes you need that nuclear option.

Log Streaming: In the Azure Portal, go to your Function App → Log stream. You see logs in real-time as your functions execute. It's basically a tail -f for your serverless functions.

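Snapshot Debugging: For those "works on my machine" moments in .NET function apps, you can enable Snapshot Debugging in Application Insights. When an exception occurs, it captures the state of your application (variable values, call stack, everything), and you can open the snapshot in Visual Studio and debug it as if you were there when it happened. Snapshot Debugger only supports .NET, though, so for the NodeJS functions in this tutorial you'll lean on the exception telemetry and stack traces in Application Insights instead.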

Scaling and Performance

Consumption vs Premium vs Dedicated

Consumption Plan: Pay per execution, scales automatically, but can have cold starts. Perfect for sporadic workloads or getting started. In past projects, I've used this for functions that process nightly batch jobs.

Premium Plan: No cold starts thanks to pre-warmed instances, VNET integration, and longer execution times (30-minute default, and you can raise it to 60 minutes or more). Costs more but worth it for customer-facing APIs. I've moved APIs to Premium in the past after users complained about cold start delays.

Dedicated (App Service) Plan: Predictable pricing, runs on dedicated VMs. Use this when you have steady, predictable load or need specialized VM sizes.

Cold start comparison with Lambda? In my testing, Azure Functions on Premium plan have effectively zero cold start. Lambda's Provisioned Concurrency is similar but requires more configuration and planning.

Durable Functions Teaser

Regular functions are stateless, but Durable Functions let you write stateful functions in a serverless environment. Imagine orchestrating a complex appointment scheduling workflow:

import * as df from 'durable-functions'

const orchestrator = df.orchestrator(function* (context) {

const appointment = context.df.getInput();

// Run these in parallel

const tasks = [];

tasks.push(context.df.callActivity('ValidateProvider', appointment.providerId));

tasks.push(context.df.callActivity('CheckPatientEligibility', appointment.patientId));

tasks.push(context.df.callActivity('CheckScheduleAvailability', appointment));

yield context.df.Task.all(tasks);

// Wait for human approval if high-priority

if (appointment.priority === 'high') {

const approved = yield context.df.waitForExternalEvent('ApprovalEvent');

if (!approved) {

return { status: 'rejected' };

}

}

// Schedule the appointment

yield context.df.callActivity('ScheduleAppointment', appointment);

// Send notifications

yield context.df.callActivity('SendConfirmationEmail', appointment);

return { status: 'scheduled', appointmentId: appointment.id };

});

This orchestration can run for days, survive restarts, and maintains state without you managing any infrastructure. It's mind-blowing when you first see it work.

Comparison of Azure Functions & AWS Lambda

After building production systems on both platforms, here's my take:

Where Azure Functions Wins

Developer Tooling: The local development experience is unmatched. The F5 debugging, local storage emulators, and seamless deployment from VS Code make development genuinely enjoyable.

Integrated Bindings: Declarative bindings reduce code complexity dramatically. You focus on business logic, not service integration.

Enterprise Integration: If you're already in the Microsoft ecosystem (Active Directory, Office 365, Teams), Azure Functions integrate naturally. Managed Identity just works with other Azure services.

TypeScript Support: First-class templates and tooling, with the build step wired up for you instead of left as an exercise.

Where Lambda Still Has Advantages

Ecosystem: More third-party triggers and integrations. If you need to trigger from a random SaaS product, they probably have a Lambda integration.

IAM Controls: AWS IAM is more granular and powerful than Azure RBAC, though also more complex.

Edge Computing: Lambda@Edge for CDN-integrated functions is powerful for certain use cases.

Community: Larger community means more Stack Overflow answers and blog posts.

The Verdict

Choose Azure Functions when developer experience and productivity matter most. Choose Lambda when you need specific AWS integrations or are already deep in the AWS ecosystem.

But honestly? If you're starting fresh and want to actually enjoy serverless development, Azure Functions will make you happier. I've seen developers who dreaded working with Lambda light up when they discovered how easy Azure Functions are to work with.

Common Pitfalls and How to Avoid Them

The "Works Locally, Fails in Azure" Scenarios

Connection String Mishaps: The classic. Your local.settings.json has UseDevelopmentStorage=true, but you forgot to set the actual storage connection string in Azure. Always check Configuration → Application settings first when debugging deployment issues.
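A cheap way to catch this early is a startup check that refuses to run in Azure with emulator settings. Here's a minimal sketch. It uses WEBSITE_SITE_NAME as the "running in Azure" signal, which is an assumption on my part, so swap in whatever marker your deployment sets. The function name is made up as well.

```typescript
type Env = { [name: string]: string | undefined };

// Return a list of human-readable problems; an empty list means the
// required settings look sane. Pass process.env in a real function app.
function findSettingProblems(env: Env, required: string[]): string[] {
  const problems: string[] = [];
  const inAzure = !!env["WEBSITE_SITE_NAME"]; // assumed "deployed" marker
  for (const name of required) {
    const value = env[name];
    if (!value) {
      problems.push(name + " is not set");
    } else if (inAzure && value.indexOf("UseDevelopmentStorage=true") !== -1) {
      problems.push(name + " still points at the local storage emulator");
    }
  }
  return problems;
}

const deployedProblems = findSettingProblems(
  { WEBSITE_SITE_NAME: "func-appointments-prod", AzureWebJobsStorage: "UseDevelopmentStorage=true" },
  ["AzureWebJobsStorage", "CosmosDBConnection"]
);
console.log(deployedProblems.length); // 2
```

Log the problems (or throw) on the first invocation and you get a clear message instead of a binding that silently never fires.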

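Dependency Version Mismatches: NodeJS 18 locally but NodeJS 16 in Azure? You're gonna have a bad time. Pin the Node version on the Function App itself (the --runtime-version flag at creation, or the WEBSITE_NODE_DEFAULT_VERSION setting on Windows plans), and keep the extension bundle range in host.json explicit so local and deployed bindings match: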

{

"version": "2.0",

"extensionBundle": {

"id": "Microsoft.Azure.Functions.ExtensionBundle",

"version": "[4.*, 5.0.0)"

},

"extensions": {

"http": {

"routePrefix": "api"

}

}

}

Timeout Configuration: The default timeout is 5 minutes on Consumption plan. That batch processing function that takes 6 minutes locally? It's getting killed in production. Either optimize it, split it up, raise functionTimeout in host.json (Consumption allows up to 10 minutes), or move to Premium plan (30 minute default, with longer timeouts configurable).
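The timeout itself is set with functionTimeout in host.json, written as hh:mm:ss. Here's a rough sketch for sanity-checking a value against a plan before you deploy. The limits in the table are the documented ones as I understand them at the time of writing (Consumption: 5 minute default, 10 minute maximum; Premium: 30 minute default, no enforced maximum), so check the current hosting docs before relying on them. The helper names are mine.

```typescript
type Plan = "consumption" | "premium";

// Timeout limits in minutes. null means no enforced upper bound.
const TIMEOUT_LIMITS: { [P in Plan]: { defaultMinutes: number; maxMinutes: number | null } } = {
  consumption: { defaultMinutes: 5, maxMinutes: 10 },
  premium: { defaultMinutes: 30, maxMinutes: null },
};

// Parse a host.json functionTimeout value ("hh:mm:ss") into minutes.
function timeoutMinutes(functionTimeout: string): number {
  const parts = functionTimeout.split(":");
  if (parts.length !== 3) {
    throw new Error("Expected hh:mm:ss, got " + functionTimeout);
  }
  const h = Number(parts[0]);
  const m = Number(parts[1]);
  const s = Number(parts[2]);
  if (isNaN(h) || isNaN(m) || isNaN(s)) {
    throw new Error("Expected hh:mm:ss, got " + functionTimeout);
  }
  return h * 60 + m + s / 60;
}

// True when the requested timeout is allowed on the given plan.
function fitsPlan(functionTimeout: string, plan: Plan): boolean {
  const max = TIMEOUT_LIMITS[plan].maxMinutes;
  return max === null || timeoutMinutes(functionTimeout) <= max;
}

// What host.json could carry for the 6-minute batch job on Consumption.
const hostJsonFragment = { version: "2.0", functionTimeout: "00:10:00" };

console.log(fitsPlan(hostJsonFragment.functionTimeout, "consumption")); // true
console.log(fitsPlan("00:30:00", "consumption"));                       // false
```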

Cost Optimization Tips

Avoid Runaway Costs: Set up budget alerts. I once had a bug that caused infinite recursion between two functions. The bill was... educational.

// DON'T DO THIS

const processTrigger: AzureFunction = async function (context: Context, message: any) {

// Bug: This causes infinite loop if processing fails

if (!isValid(message)) {

context.bindings.outputQueue = message; // Requeues to same queue!

return;

}

// ... rest of processing

};

Storage Transactions Add Up: Every queue read, blob access, and table operation costs money. Batch operations when possible:

// Expensive: Multiple storage operations

for (const item of items) {

await container.items.create(item);

}

// Cheaper: Batch operation

await container.items.bulk(items.map(item => ({

operationType: "Create",

resourceBody: item

})));

Know When to Move from Consumption to Premium: The Consumption plan's monthly free grant covers 400,000 GB-s. If you're running well past that with steady load, Premium might actually be cheaper. Plus, no cold starts.
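If GB-s feels abstract, the arithmetic is simple: memory in GB, times execution time in seconds, times number of executions. The sketch below ignores billing details like memory rounding and minimum execution time, and the workload numbers are made up, so treat the result as a back-of-the-envelope estimate.

```typescript
// GB-seconds = memory in GB x execution time in seconds x number of executions.
// Rough estimate only: billing-specific rounding is deliberately left out.
function gbSeconds(memoryMb: number, avgDurationMs: number, executions: number): number {
  return (memoryMb / 1024) * (avgDurationMs / 1000) * executions;
}

const MONTHLY_THRESHOLD_GB_S = 400000; // the figure quoted above

// Example workload (made-up numbers): 512 MB, 1 second average,
// 1 million executions a month.
const monthlyUsage = gbSeconds(512, 1000, 1000000);

console.log(monthlyUsage);                          // 500000
console.log(monthlyUsage > MONTHLY_THRESHOLD_GB_S); // true
```

Run your own numbers from Application Insights before switching plans; average duration is usually the figure that surprises people.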

Next Steps and Resources

What to Build Next

Event-Driven Architectures with Service Bus: Move beyond HTTP triggers. Service Bus topics and subscriptions enable powerful pub/sub patterns.

API Management Integration: Put Azure API Management in front of your functions for rate limiting, authentication, and API versioning.

Durable Functions for Workflows: That appointment scheduling workflow I showed? Build it. Durable Functions will change how you think about serverless.

Real-time with SignalR Service Bindings: Push real-time updates to web clients without managing WebSocket connections.

Learning Resources

Microsoft Learn: The Azure Functions learning path is actually good. Not "vendor documentation good," but genuinely helpful.

GitHub Repo: Microsoft's Azure Samples repo has real, working examples for Azure Functions in several scenarios. Not the usual "Hello World" nonsense.

The Serverless Experience Developers Deserve

We've gone from zero to deployed in under an hour. You've built, debugged locally, and deployed real functions that process data and integrate with other services. More importantly, you did it without fighting your tools or deciphering cryptic error messages.

This is what serverless should have been from the start—focusing on writing business logic, not wrestling with infrastructure. Azure Functions delivers on that promise.

After years of fighting with serverless complexity, it's refreshing to find a platform that just lets you write code and ship it. The local development experience alone has saved me countless hours of deployment-debug cycles. The integrated bindings mean less boilerplate and fewer bugs. And the deployment options mean you can start simple and grow into enterprise-grade CI/CD when you need it.

Is Azure Functions perfect? No. You'll still hit occasional weird issues, the documentation could be better in places, and the Azure Portal can be overwhelming at first. But compared to the alternatives? It's not even close.

The next time you're starting a serverless project, give Azure Functions a try. Set aside an hour, follow this tutorial, and see if you don't find yourself thinking "why didn't I try this sooner?" Just remember to set those budget alerts... your future self will thank you. 😅

Now if you'll excuse me, I have some Lambda functions to migrate.